First ungrading assessment

Here’s the initial assessment of the new ungrading and feedback pedagogy that the TAs and I have implemented this academic year (2022-2023). In a nutshell, we decided not to mark any of the weekly tests and to maximise opportunities for feedback, to favour students’ self-assessment and reflection on their own work (meta-cognition). Read the full post to learn about the reasons for dropping the grading of the weekly tests and the opportunities for maximising feedback with students.

This initial assessment is split into several parts, each describing a different source of data used for the assessment. The parts were added at different times. Part 1 was originally added on 15 December 2022 and is based on direct, free-form informal and semi-formal feedback. Part 2 is based on on-line evaluation forms that students were invited to fill out and was published on 27 December 2022.

Part 1

Third bachelor course

Let’s start with the third bachelor students’ feedback, collected as part of the ‘Year committee’, where student representatives meet with the professors and share the feedback they collected among all students. This is a cohort that experienced the previous approach, where each weekly test was graded and students who earned a decent weighted average would get an exemption from the final exam¹.

- While some students did prefer being kept on their toes with marking, the majority appreciate dropping marking to reduce stress/pressure during weekly tests.

- The general pedagogy and the feedback are well received. In particular, students like the post-it notes for in-class interactions and feedback, and the possibility to book one-to-one sessions to get individual feedback.

- This year, we also have the support of a third TA, which helps a lot to provide prompt technical help and answer questions without delay during class.

The feedback we gathered on the post-it notes after the last lecture and our subjective impression (based on the perceived motivation of students, and the quantity and quality of their questions) are along the same lines. Some students already mentioned on the post-it notes that they were looking forward to next year’s (optional) Master’s course, which is a strong argument in favour of our strategy.

First master’s course

This year, for the first time, students were asked to self-mark during the final oral exam. They all provided a fair assessment of their work, at times even slightly lower than what they really deserved (in which case we obviously bumped the mark accordingly). I also systematically asked what they thought they could have improved and whether more time would have helped. Interestingly, the topic of the last project/presentations, which was a more open-ended and creative task, was one point that came up repeatedly, with analysis approaches that they didn’t think of but thought they should have.

We also considered some further updates for next year, to promote feedback and allow students to act specifically on that feedback. For the first report, which focuses on a hands-on analysis of RNA-Seq data, here are the steps that we plan to implement:

1. After receiving their data, students will give a first short presentation introducing their dataset, the experimental design, the biological question(s) they want to focus on, and the associated statistical model(s). This is a first opportunity for feedback and to make sure we catch any misdirections early on.

2. A full 4-hour session dedicated to questions and answers on their data, the corresponding chapters, and how to prepare the report.

3. A report, written in R markdown and compiled to PDF, detailing the analyses introduced in the presentation above (point 1).

4. We will read and annotate the reports, and provide an individual feedback sheet including a short section with positive points, a short section with possible improvements and a list of questions. The questions we will ask during the end-of-term oral exam (point 6 below) will be among those in this list, so that students can prepare beforehand and thus address any shortcomings in their respective reports.

5. Each student will receive another student’s report to read and provide constructive comments on. This will allow them to explore how others have addressed their project and experience how to critically read and assess another person’s work (and thus reflect on their own contribution).

6. An oral exam to offer the students an opportunity to answer (some of) the questions we handed them (point 4).

As every year so far, I have also asked students to comment on one aspect of the course they particularly appreciated and one that they appreciated less, as well as to assess the amount of work they had to invest. This is interesting as it provides them with an opportunity to reflect on the whole course and discuss their impressions with us, which we use to update and improve the course.

Next assessments

We need to consider whether the positive impact that we seem to observe in the third bachelor’s course also translates into a better success rate in the exam. I do have to admit that this makes me slightly uncomfortable. What if we were to see a negative impact (which, honestly, I doubt)? It wouldn’t necessarily mean that we did worse, given that we have applied our new strategy to a single cohort. This obviously also applies if we get better results: a better or worse success rate might be the result of other confounding factors. However, this cohort will be the only one that has experienced the change in teaching strategy, and is hence probably an ideal situation for a direct assessment. If I’m being honest, I wouldn’t want to teach to the test even if exam results were provably worse, for the many reasons underlying the new ungrading and feedback strategy.

Next term, we start with a brand new second bachelor cohort. This will also be an interesting experience, as they will be immediately exposed to this ungrading and feedback strategy (with us having a term’s worth of experience), and will experience and adapt² to it over two years.

Part 2

On-line questionnaire

This second part of the assessment looks at on-line evaluation forms that third bachelor students are asked to fill out. There are two types of forms: one for the teaching unit (i.e. the theoretical part of the course) and a second one for the practical sessions. These evaluation forms can be requested by the instructor or the faculty (and are indeed asked for every three years by the latter, if I remember correctly). I have three forms available, for two different cohorts.

-

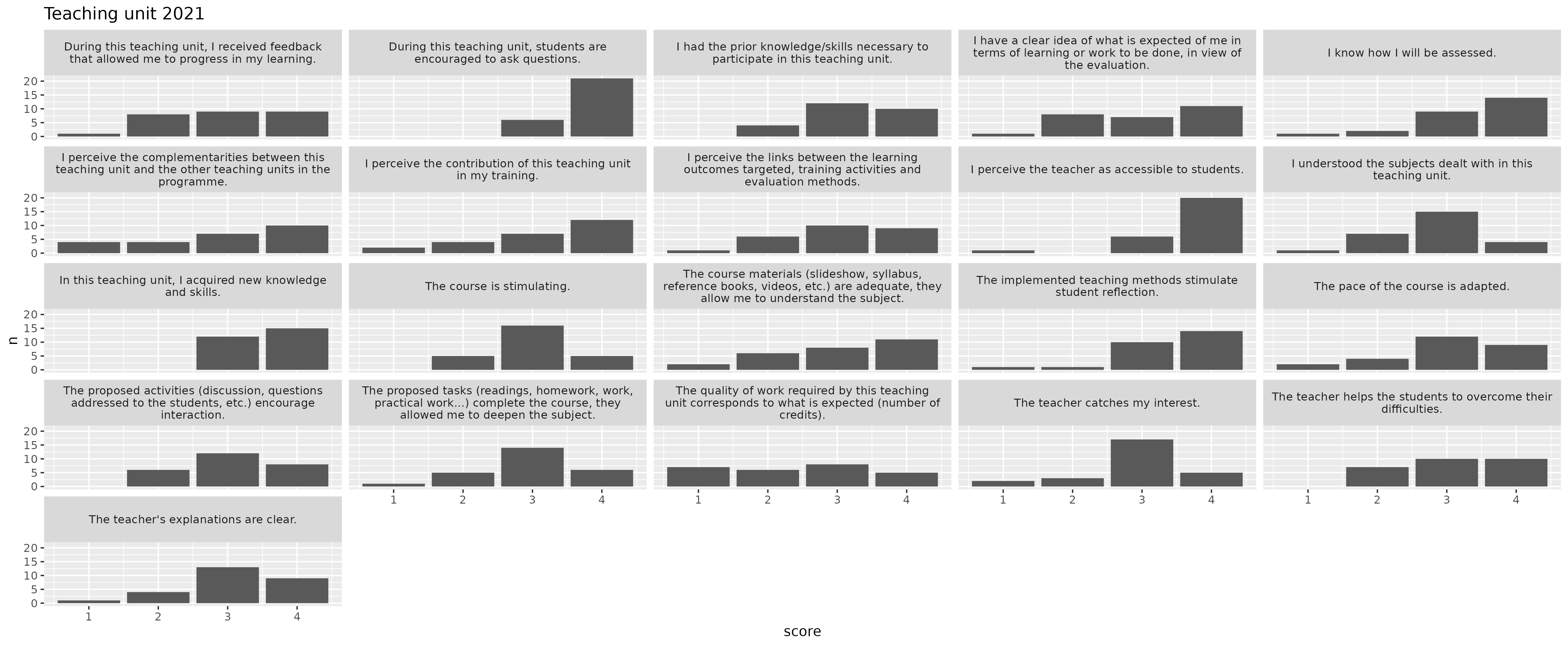

Evaluations for the teaching unit from 2021. That’s one that I requested myself. Given that my courses blend theory and practice, I only requested the teaching unit questions, asking students to fill out the form considering both aspects. This form was completed by 27 students (individual questions were answered by 25 to 27 students), corresponding to half of the class.

-

Evaluations for the teaching unit and practicals from 2022. These were requested by the faculty, hence for both parts. The requests and links to the forms where received after completion of the course, and I was only able to inform and remind students through forum announcements, hence a low participation rate: only 7 students (with one question getting 6 answers), corresponding to just under 12% of the full cohort. This probably induces some bias, where only the most committed students, and hence those more likely to provide positive feedback, participated.

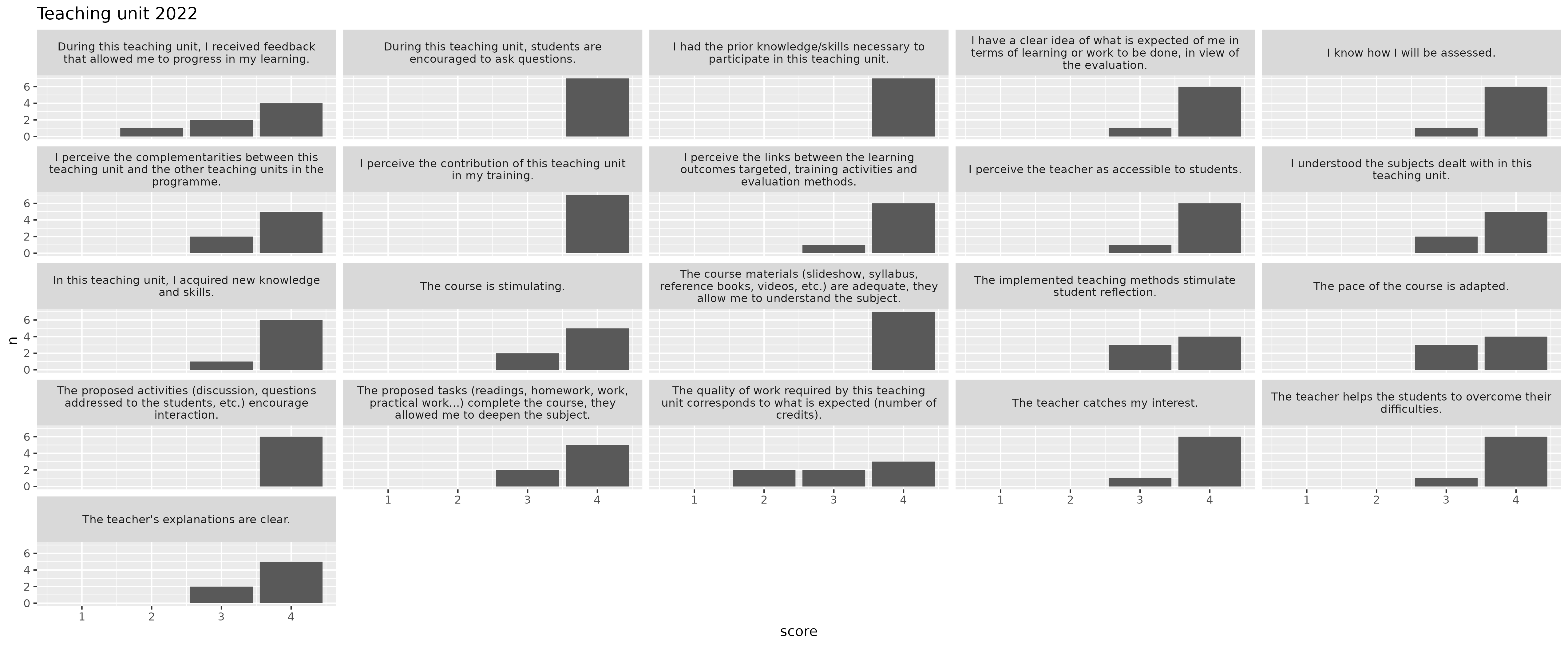

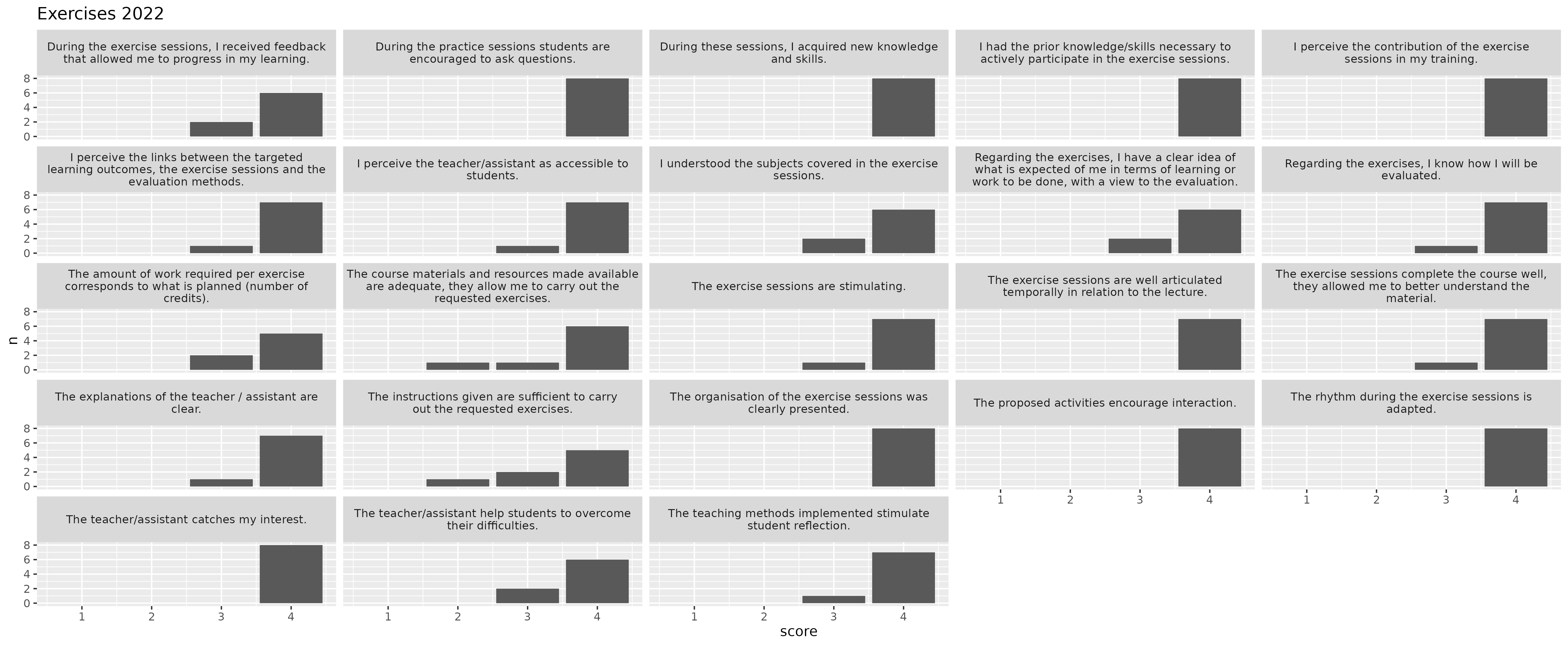

Here are the scores for the 2022 teaching unit and practical sessions. The students answer each question³ by providing a score from 1 (Don’t agree at all) to 4 (Totally agree).

These evaluations are quite good, especially when compared to 2021. Below are the questions for the teaching unit of that year.

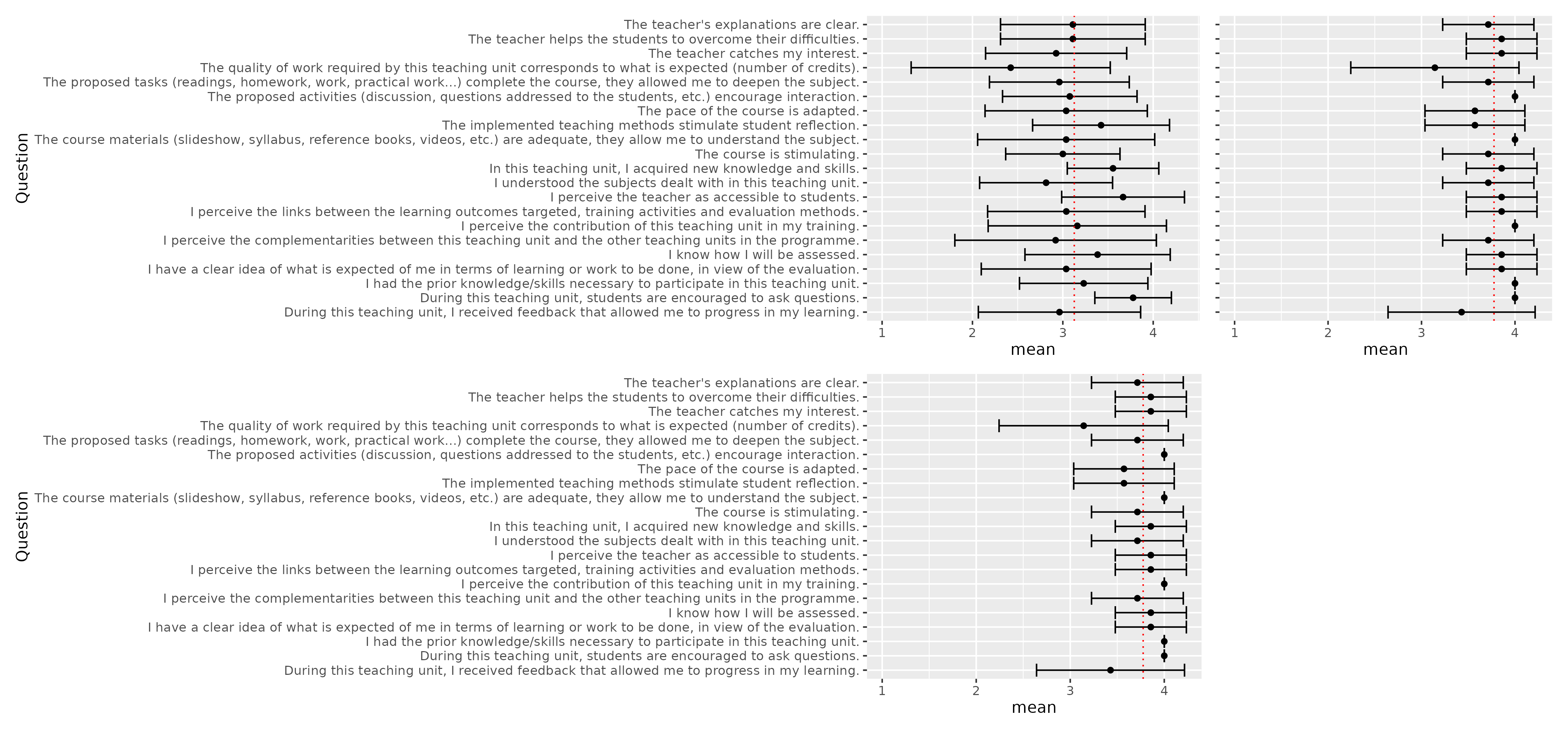

I also computed the mean scores and standard deviations per question to directly compare the 2021 and 2022 results. The figure below shows the 2021 results in the top left panel and the 2022 results in the top right and bottom left panels. The top right panel is helpful to compare individual questions and the bottom panel to get a more general assessment of the 2021 vs 2022 scores. The dotted red line represents the yearly mean score.
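For readers who would like to run the same kind of summary on their own evaluation forms, here is a minimal sketch of the computation in Python. The scores below are made-up placeholders, not the actual evaluation data, and the `summarise` helper is hypothetical. I also assume here that the yearly mean is the mean of the per-question means, which is only one possible definition.

```python
from statistics import mean, stdev

# Hypothetical placeholder scores on the 1-4 scale, NOT the real evaluation data.
scores = {
    "2021": {"Q1": [2, 3, 3, 4], "Q2": [1, 2, 2, 3]},
    "2022": {"Q1": [4, 4, 3, 4], "Q2": [3, 4, 4, 3]},
}


def summarise(year_scores):
    """Return ({question: (mean, sample sd)}, yearly mean) for one year."""
    per_question = {q: (mean(v), stdev(v)) for q, v in year_scores.items()}
    # Assumption: the yearly mean is the mean of the per-question means.
    yearly_mean = mean(m for m, _ in per_question.values())
    return per_question, yearly_mean


for year, year_scores in scores.items():
    per_question, yearly_mean = summarise(year_scores)
    for question, (m, sd) in per_question.items():
        print(f"{year} {question}: mean={m:.2f} sd={sd:.2f}")
    print(f"{year} yearly mean: {yearly_mean:.2f}")
```

With as few as 6 or 7 answers per question, as in 2022, the standard deviations are themselves quite uncertain, so they are best read as a rough indication of spread.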

The improvement is striking, to say the least. There are however two points to keep in mind:

- In 2022, given that the evaluation request came in late, it is possible that only the most committed students participated, hence boosting these scores.

- The 2021 cohort had a hard time during the COVID lockdown. They had to follow the second bachelor course (a prerequisite to the one evaluated above) remotely, which was hard on them and is likely to have reduced the overall score.

Even considering the above possible confounding factors, I am tempted to take the figures from the student on-line evaluations as a strong endorsement for the new teaching strategy. Hopefully, this will also materialise in a likely part 3 to this post, describing the final exam results.

1. The latter does read like a nice opportunity for students, but do read the motivation for dropping this, and learn about the perverse incentives of such an approach.

2. The word adapting is quite important here. Students adapt to whatever the system throws at them, all too often for the worse… here, I hope for the better. What we propose is quite different from what they are used to, so it does take some adaptation.

3. The original questions are in French and were translated into English with Google Translate, with minimal editing.